There are a few more essential skills that we need in our toolbox. In this chapter, we will explore some of the powerful methods of kinematic trajectory motion planning.

In fact, if you ran the clutter clearing demo, I would say that motion planning failures were the biggest limitation of that solution so far: the hand or objects could sometimes collide with the cameras or bins, or the differential-inverse kinematics strategy (which effectively ignored the joint angles) would sometime cause the robot to fold in on itself. In this chapter we'll develop the tools to make that much better!

I do need to make one important caveat. For motion planning in manipulation, lots of emphasis is placed on the problem of avoiding collisions. Despite having done some work in this field myself, I actually really dislike the problem formulation of collision-free motion planning. I think that on the whole, robots are too afraid of bumping into the world (because things still go wrong when they do). I don't think humans are solving these complex geometric problems every time we reach. even when we are reaching in dense clutter. I actually suspect that we are very bad at solving them. I would much rather see robots that perform well even with very coarse / approximate plans for moving through a cluttered environment, that are not afraid to make incidental contacts, and that can still accomplish the task when they do!

The goal of this chapter is to solve for motion trajectories. But I would argue that if you really understand how to solve inverse kinematics, then you've got most of what you need to plan trajectories.

We know that the forward kinematics give us a (nonlinear) mapping from joint angles to e.g. the pose of the gripper: $X^G = f_(q)$. So, naturally, one would think that the problem of inverse kinematics (IK) is about solving for the inverse map, $q = f^_(X^G).$ But, like we did with differential inverse kinematics, I'd like to think about inverse kinematics as the more general problem of finding joint angles subject to a rich library of costs and constraints; and the space of possible kinematic constraints is indeed rich.

For example, when we were evaluating grasp candidates for bin picking, we had only a soft preference on the orientation of the hand relative to some antipodal grasp. In that case, specifying 6 DOF pose of the gripper and finding one set of joint angles which satisfies it exactly would have been an overly constrained specification. I would say that it's rare that we have only end-effector pose constraints to reason about, we almost always have costs or constraints in joint space (like joint limits) and others in Cartesian space (like non-penetration constraints).

We have already discussed the idea of solving differential inverse kinematics as an optimization problem. In that workflow, we started by using the pseudo-inverse of the kinematic Jacobian, but then graduated to thinking about the least-squares formulation of the inverse problem. The more general least-squares setting, we could add additional costs and constraints that would protect us from (nearly) singular Jacobians and could take into account additional constraints from joint limits, joint velocity limits, etc. We could even add collision avoidance constraints. Some of these constraints are quite nonlinear / nonconvex functions of the configuration $q$, but in the differential kinematics setting we were only seeking to find a small change $\Delta q$ around the nominal configuration, so it was quite reasonable to make linear/convex approximations of these nonlinear/nonconvex constraints.

Now we will consider the full formulation, where we try to solve the nonlinear / nonconvex optimization directly, without any constraints on only making a small change to an initial $q$. This is a much harder problem computationally. Using powerful nonlinear optimization solvers like SNOPT, we are often able to solve the problems, even at interactive rates (the example below is quite fun). But there are no guarantees. It could be that a solution exists even if the solver returns "infeasible".

Drake provides a nice InverseKinematics class that makes it easy to assemble many of the standard kinematic/multibody constraints into a MathematicalProgram . Take a minute to look at the constraints that are offered. You can add constraints on the relative position and/or orientation on two bodies, or that two bodies are more than some minimal distance apart (e.g. for non-penetration) or closer than some distance, and more. This is the way that I want you to think about the IK problem; it is an inverse problem, but one with a potentially very rich set of costs and constraints.

Despite the nonconvexity of the problem and nontrivial computational cost of evaluating the constraints, we can often solve it at interactive rates. I've assembled a few examples of this in the chapter notebook:

In the first version, I've added sliders to let you control the desired pose of the end-effector. This is the simple version of the IK problem, amenable to more explicit solutions, but we nevertheless solve it with our full nonlinear optimization IK engine (and it does include joint limit constraints). This demo won't look too different from the very first example in the notes, where you used teleop to command the robot to pick up the red brick. In fact, differential IK offers a fine solution to this problem, too.

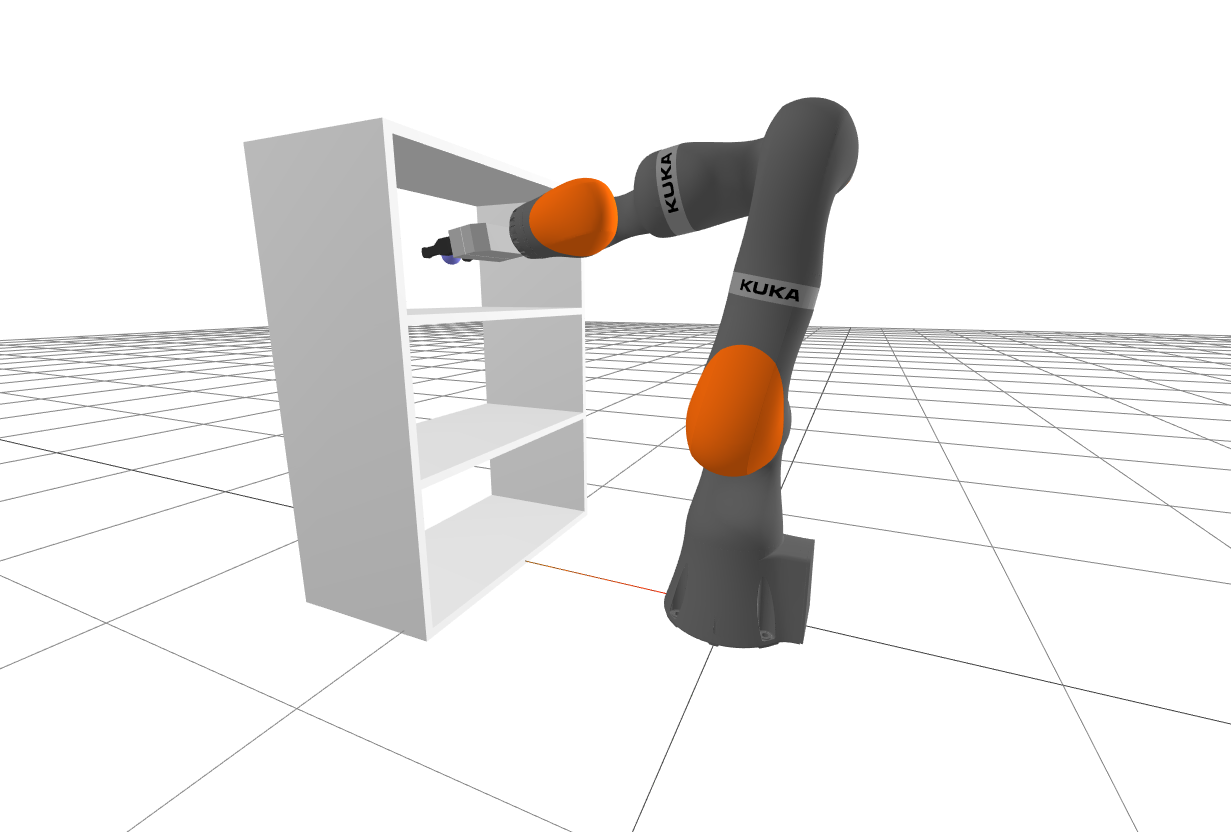

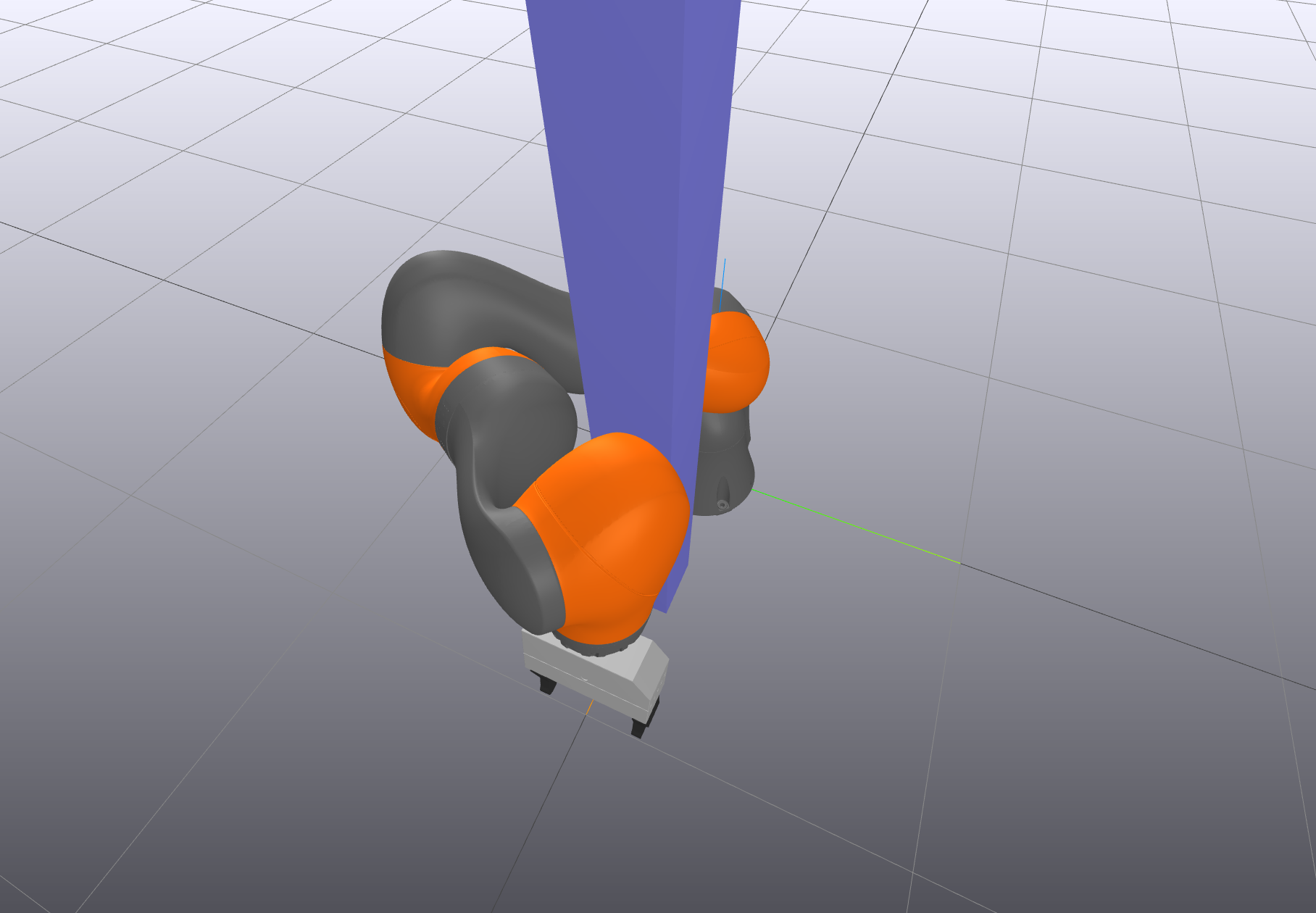

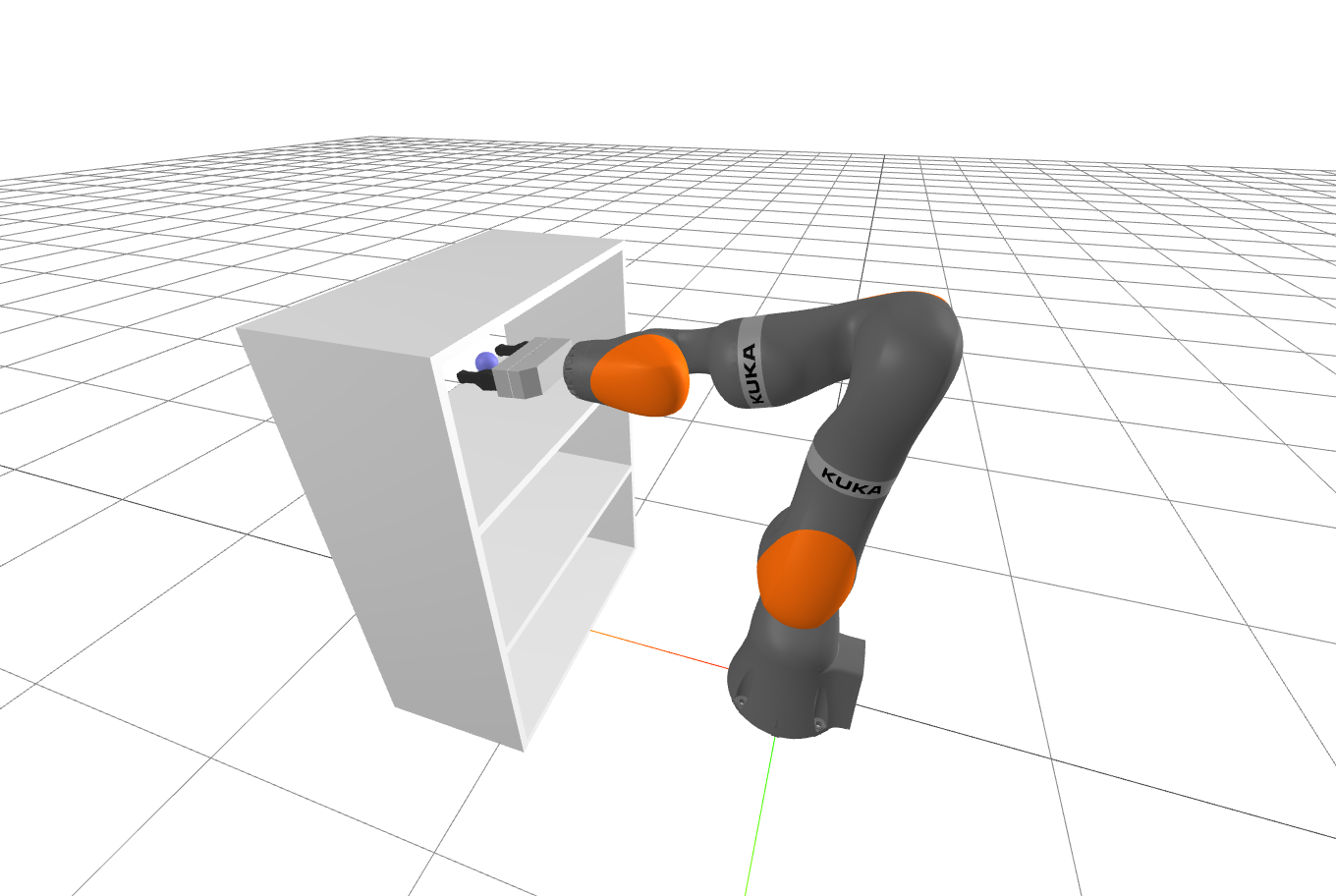

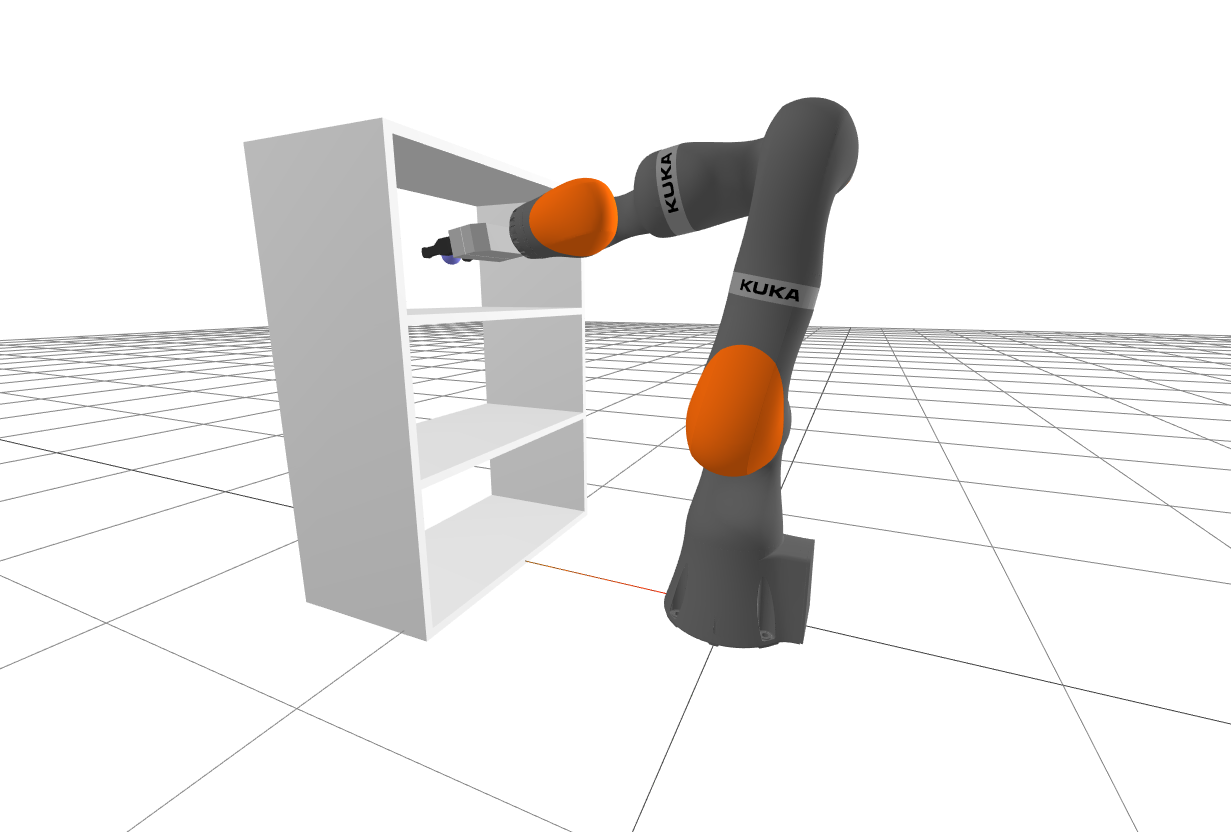

In the second example, I've tried to highlight the differences between the nonlinear IK problem and the differential IK problem by adding an obstacle directly in front of the robot. Because both our differential IK and IK formulations are able to consume the collision-avoidance constraints, both solutions will try to prevent you from crashing the arm into the post. But if you move the target end-effector position from one side of the post to the other, the full IK solver can switch over to a new solution with the arm on the other side of the post, but the differential IK will never be able to make that leap (it will stay on the first side of the post, refusing to allow a collision).

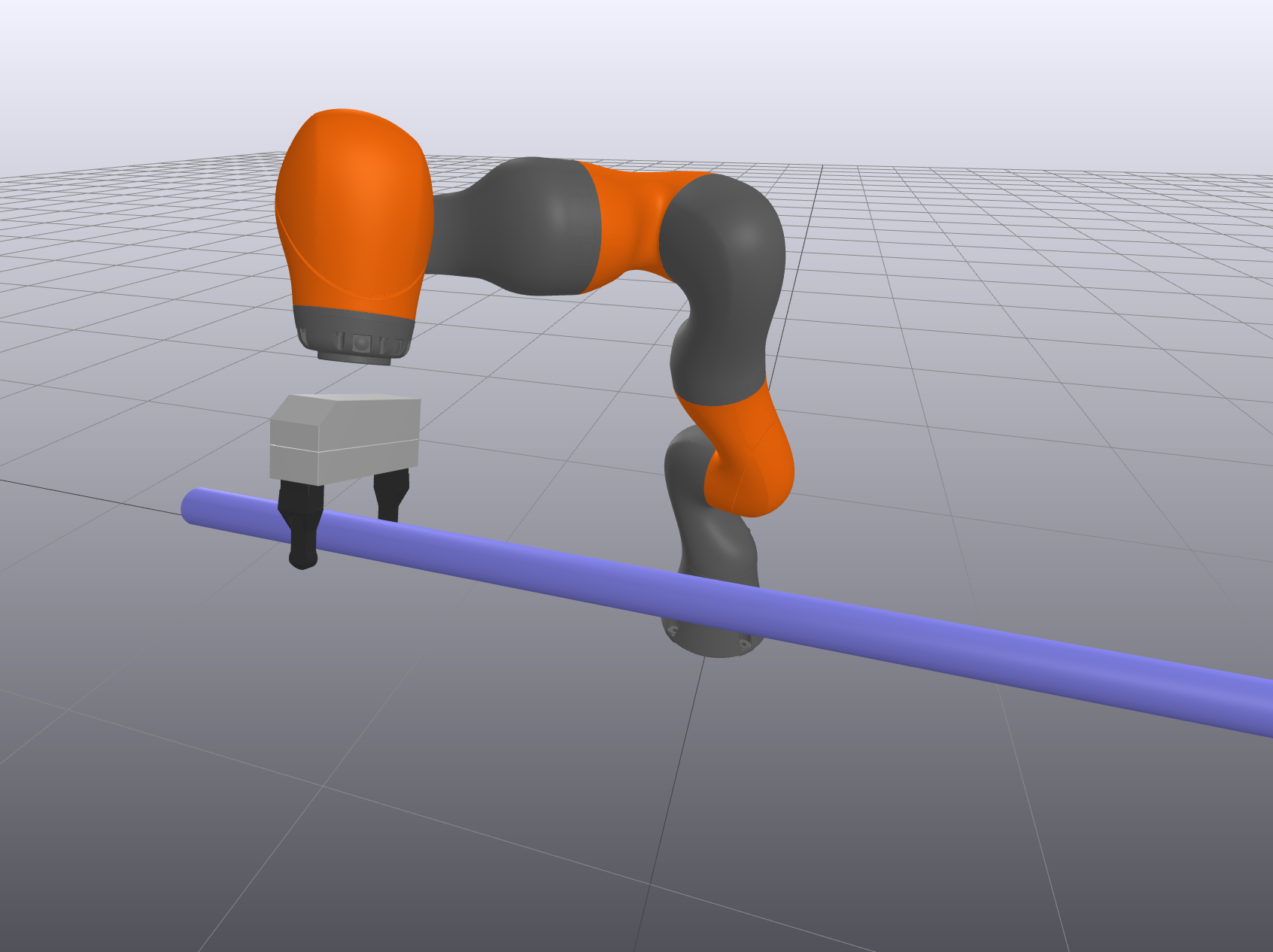

Let's use IK to grasp a cylinder. You can think of it as a hand rail, if you prefer. Suppose it doesn't matter where along the cylinder we grasp, nor the orientation at which we grasp it. Then we should write the IK problem using only the minimal version of those constraints.

In the notebook, I've coded up one version of this. I've put the cylinder's pose on the sliders now, so you can move it around the workspace, and watch how the IK solver decides to position the robot. In particular, if you move the cylinder in $\pm y$, you'll see that the robot doesn't try to follow. until the hand gets to the end of the cylinder. Very nice!

One could imagine multiple ways to implement that constraint. Here's how I did it:

ik.AddPositionConstraint( frameB=gripper_frame, p_BQ=[0, 0.1, -0.02], frameA=cylinder_frame, p_AQ_lower=[0, 0, -0.5], p_AQ_upper=[0, 0, 0.5]) ik.AddPositionConstraint( frameB=gripper_frame, p_BQ=[0, 0.1, 0.02], frameA=cylinder_frame, p_AQ_lower=[0, 0, -0.5], p_AQ_upper=[0, 0, 0.5])In words, I've defined two points in the gripper frame; in the notation of the AddPositionConstraint method they are $<>^Bp^$. Recall the gripper frame is such that $[0, .1, 0]$ is right between the two gripper pads; you should take a moment to make sure you understand where $[0,.1,-0.02]$ and $[0,.1,0.02]$ are. Our constraints require that both of those points should lie exactly on the center line segment of the cylinder. This was a compact way for me to leave the orientation around the cylinder axis as unconstrained, and capture the cylinder position constraints all quite nicely.

We've provided a rich language of constraints for specifying IK problems, including many which involve the kinematics of the robot and the geometry of the robot and the world (e.g., the minimum-distance constraints). Let's take a moment to appreciate the geometric puzzle that we are asking the optimizer to solve.

Let's return to the example of the iiwa reaching into the shelf. This IK problem has two major constraints: 1) we want the center of the target sphere to be in the center of the gripper, and 2) we want the arm to avoid collisions with the shelves. In order to plot these constraints, I've frozen three of the joints on the iiwa, leaving only the three corresponding motion in the $x-z$ plane.

To visualize the constraints, I've sampled a dense grid in the three joint angles of the planarized iiwa, assigning each grid element to 1 if the constraint is satisfied or 0 otherwise, then run a marching cubes algorithm to extract an approximation of the true 3D geometry of this constraint in the configuration space. The "grasp the sphere" constraint produces the nice green geometry I've pictured above on the right; it is clipped by the joint limits. The collision-avoidance constraint, on the other hand, is quite a bit more complicated. To see that, you'd better open up this 3D visualization so you can navigate around it yourself. Scary!

To help you appreciate the problem that we've formulated, I've made a visualization of the optimization landscape. Take a look at the landscape here first; this is only plotting a small region around the returned solution. You can use the Meshcat controls to show/hide each of the individual costs and constraints, to make sure you understand.

You should also take a quick look at the full optimization landscape. This is the same set of curves as in the visualization above, but now it's plotted over the entire domain of joint angles within the joint limits. Nonlinear optimizers like SNOPT can be pretty amazing sometimes!

For unconstrained inverse kinematics with exactly six degrees of freedom, we have closed-form solutions. For the generalized inverse kinematics problem with rich costs and constraints, we've got a nonlinear optimization problem that works well in practice but is subject to local minima (and therefore can fail to find a feasible solution if it exists). If we give up on solving the optimization problem at interactive rates, is there any hope of solving the richer IK formulation robustly? Ideally to global optimality?

This is actually an extremely relevant question. There are many applications of inverse kinematics that work offline and don't have any strict timing requirements. Imagine if you wanted to generate training data to train a neural network to learn your inverse kinematics; this would be a perfect application for global IK. Or if you want to do workspace analysis to see if the robot can reach all of the places it needs to reach in the workspace that you're designing for it, then you'd like to use global IK. Some of the motion planning strategies that we'll study below will also separate their computation into an offline "building" phase to make the online "query" phase much faster.

In my experience, general-purpose global nonlinear solvers -- for instance, based on mixed-integer nonlinear programming (MINLP) approaches or the interval arithmetic used in satisfiability-modulo-theories (SMT) solvers -- typically don't scale the complexity of a full manipulator. But if we only slightly restrict the class of costs and constraints that we support, then we can begin to make progress.

Drake provides an implementation of the GlobalInverseKinematics approach described in Dai17 using mixed-integer convex optimization. The solution times are on the order of a few seconds; it can solve a full constrained bimanual problem in well under a minute. Trutman22 solves the narrow version of the problem (just end-effector poses and joint limits) using convex optimization via a hierarchy of semi-definite programming relaxations; it would be very interesting to understand how well this approach works with the larger family of costs and constraints.

When should we use IK vs Differential IK? IK solves a more global problem, but is not guaranteed to succeed. It is also not guaranteed to make small changes to $q$ as you make small changes in the cost/constraints; so you might end up sending large $\Delta q$ commands to your robot. Use IK when you need to solve the more global problem, and the trajectory optimization algorithms we produce in the next section are the natural extension to producing actual $q$ trajectories. Differential IK works extremely well for incremental motions -- for instance if you are able to design smooth commands in end-effector space and simply track them in joint space.

In our first version of grasp selection using sampling, we put an objective that rewarded grasps that were oriented with the hand grasping from above the object. This was a (sometimes poor) surrogate for the problem that we really wanted to solve: we want the grasp to be achievable given a "comfortable" position of the robot. So a simple and natural extension of our grasp scoring metric would be to solve an inverse kinematics problem for the grasp candidate, and instead of putting costs on the end-effector orientation, we can use the joint-centering cost directly as our objective. Furthermore, if the IK problem returns infeasible, we should reject the sample.

Simple code example here

Once you understand the optimization perspective of inverse kinematics, then you are well on your way to understanding kinematic trajectory optimization. Rather than solving multiple inverse kinematics problems independently, the basic idea now is to solve for a sequence of joint angles simultaneously in a single optimization. Even better, let us define a parameterized joint trajectory, $q_\alpha(t)$, where $\alpha$ are parameters. Then a simple extension to our inverse kinematics problem would be to write something like \begin \min_ & \quad T, \\ \subjto &\quad X^> = f_(q_\alpha(0)),\\ & \quad X^> = f_(q_\alpha(T)), \\ & \quad \forall t , \quad \left|\dot_\alpha(t)\right| \le v_ \label. \end I read this as "find a trajectory, $q_\alpha(t)$ for $t \in [0, T]$, that moves the gripper from the start to the goal in minimum time".

The last equation, (\ref), represents velocity limits; this is the only way we are telling the optimizer that the robot cannot teleport instantaneously from the start to the goal. Apart from this line which looks a little non-standard, it is almost exactly like solving two inverse kinematics problems jointly, except instead of having the solver take gradients with respect to $q$, we will take gradients with respect to $\alpha$. This is easily accomplished using the chain rule.

The interesting question, then, becomes how do we actually parameterize the trajectory $q(t)$ with a parameter vector $\alpha$? These days, you might think that $q_\alpha(t)$ could be a neural network that takes $t$ as an input, offers $q$ as an output, and uses $\alpha$ to represent the weights and biases of the network. Of course you could, but for inputs with a scalar input like this, we often take much simpler and sparser parameterizations, often based on polynomials.

There are many ways one can parameterize a trajectory with polynomials. For example in dynamic motion planning, direct collocation methods use piecewise-cubic polynomials to represent the state trajectory, and the pseudo-spectral methods use Lagrange polynomials. In each case, the choice of basis functions is made so that algorithm can leverage a particular property of the basis. In dynamic motion planning, a great deal of focus is on the integration accuracy of the dynamic equations to ensure that we obtain feasible solutions to the dynamic constraints.

Write up the B-spline math here; and clean up the Drake notation/implementation in the process. In particular, I want to purge the use of symbolic from KinematicTrajectoryOptimization. My `RussTedrake/drake:bsplinebasis_derivatives` branch has a start at getting analytical derivatives for the trajopt workflow.

Note that B-splines are closely related to Bézier curves. But the "B" in "B-spline" actually just stands for "basis" (no, I'm not kidding) and " Bézier splines" are slightly different.

A simple interactive gui for moving around the control points and visualizing the spline.

The default KinematicTrajectoryOptimization class in Drake optimizes a trajectory defined using a B-spline to represent a path, $r(s)$ over the interval $s \in [0,1]$, plus an additional scalar decision variable corresponding to the trajectory duration, $T$. The final trajectory combines the path with the time-rescaling: $q(t) = r(t/T).$ This is a particularly nice way to represent a trajectory of unknown duration, and has the excellent feature that the convex hull property can still be used. Velocity constraints are still linear; constraints on acceleration and higher derivatives do become nonlinear, but if satisfied they still imply strict bounds $\forall t \in [0, T].$

One of the most interesting set of constraints that we can add to our kinematic trajectory optimization problem is the MinimumDistanceLowerBoundConstraint; when the minimum distance between all potential collision pairs is greater than zero then we have avoided collisions. Please note, though, that these collision constraints can only be enforced at discrete samples, $s_i \in [0,1]$, along the path. They do not guarantee that the trajectory is collision free $\forall t\in[0,T].$ It required very special properties of the derivative constraints to leverage the convex hull property; we do not have them for more general nonlinear constraints. A common strategy is to add constraints at some modest number of samples along the interval during optimization, then to check for collisions more densely on the optimized trajectory before executing it on the robot.

As a warm-up, I've provided a simple example of the planar iiwa reaching from the top shelf into the middle shelf.

If you look carefully at the code, I actually had to solve this trajectory optimization twice to get SNOPT to return a good solution (unfortunately, since it is a local optimization, this can happen). For this particular problem, the strategy that worked was to solve once without the collision avoidance constraint, and then use that trajectory as an initial guess for the problem with the collision avoidance constraint.

Another thing to notice in the code is the "visualization callback" that I use to plot a little blue line for the trajectory as the optimizer is solving. Visualization callbacks are implemented by e.g. telling the solver about a cost function that depends on all of the variables, and always returns zero cost; they get called every time the solver evaluates the cost functions. What I've done here can definitely slow down the optimization, but it's an excellent way to get some intuition about when the solver is "struggling" to numerically solve a problem. I think that the people / papers in this field with the fastest and most robust solvers are highly correlated with people who spend time visualizing and massaging the numerics of their solvers.

We can use KinematicTrajectoryOptimization to do the planning for our clutter clearing example, too. This optimization was more robust, and did not require solving twice. I only seeded it with a trivial initial trajectory to avoid solutions where the robot tried to rotate 270 degrees around its base instead of taking the shorter path.

Show example(s) from TRI dish-loading.

There are a number of related approaches to kinematic trajectory optimization in the motion planning literature, which differ either by their parameterization or by the solution technique (or both). Some of the more well-known include CHOMP Ratliff09a , STOMP Kalakrishnan11a , and KOMO Toussaint17 .

KOMO, for instance, is one of a handful of trajectory optimization techniques that use the Augmented Lagrangian method of transcribing a constrained optimization problem into an unconstrained problem, then using a simple but fast gradient-based solver Toussaint14 . Augmented-Lagrangian-based approaches appear to be the most popular and successful these days; I hope to provide a nice implementation in Drake soon! But one does have to be careful in comparing these different solvers -- SNOPT may declare failure if it cannot optimize the cost and satisfy constraints to the default tolerances (around 1e-6), while an Augmented-Lagrangian approach will never report failure and will rarely aim for this level of accuracy.

When kinematic trajectory optimizations succeed, they are an incredibly satisfying solution to the motion planning problem. They give a very natural and expressive language for specifying the desired motion (with needing to sample nonlinear constraints as perhaps the one exception), and they can be solved fast enough for online planning. The only problem is: they don't always succeed. Because they are based on nonconvex optimization, they are susceptible to local minima, and can fail to find a feasible path even when one exists.

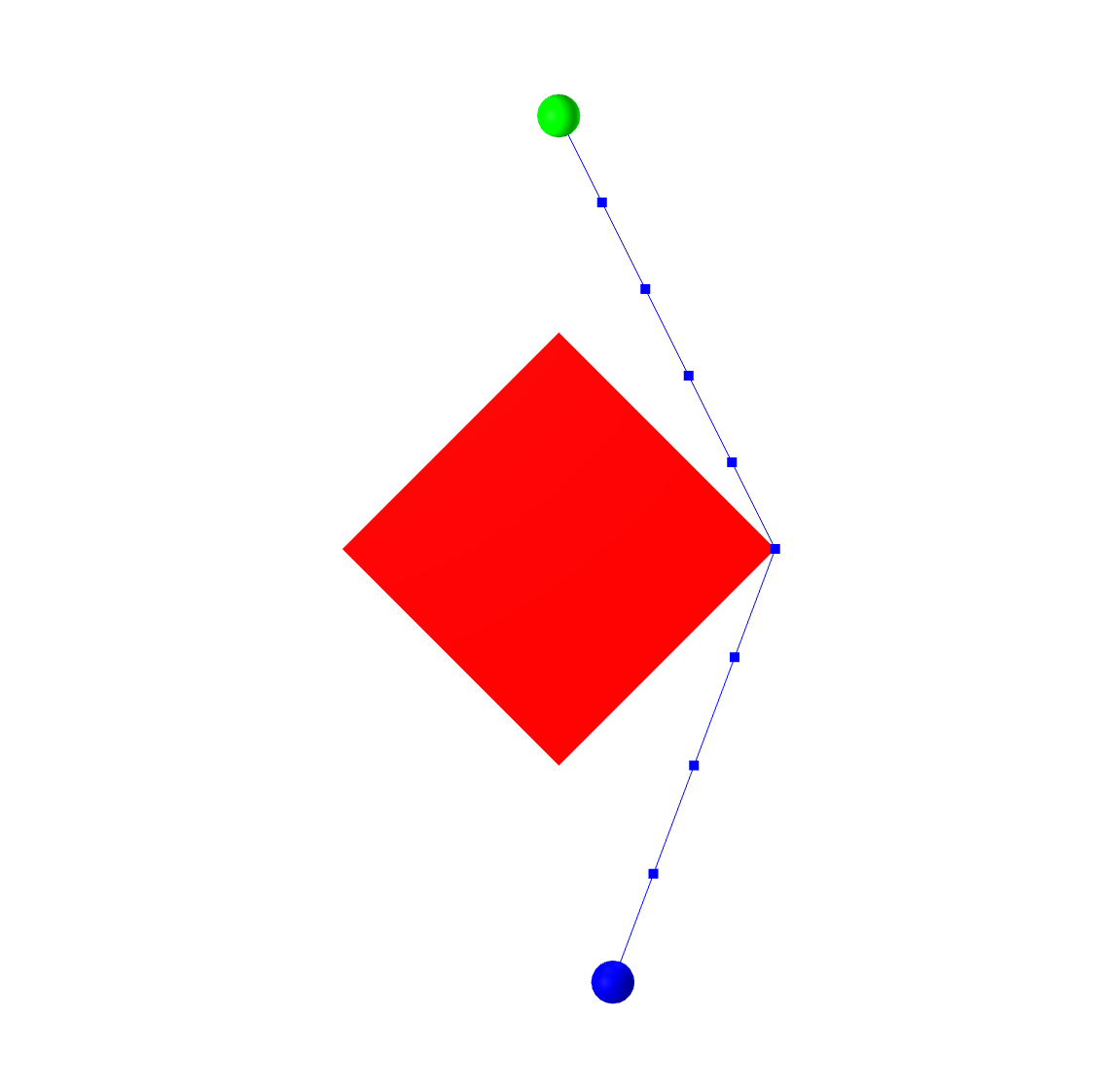

Consider the extremely simple example of finding the shortest path for a point robot moving from the start (blue circle) to the goal (green circle) in the image below, avoiding collisions with the red box. Avoiding even the complexity of B-splines, we can write an optimization of the form: \begin \min_ \quad & \sum_^ | q_ - q_n|_2^2 & \\ \text \quad & q_0 = q_ \\ & q_N = q_ \\ & |q_n|_1 \ge 1 & \forall n, \end where the last line is the collision-avoidance constraint saying that each sample point has to be outside of the $\ell_1$-ball (my convenient choice for the geometry of the red box). Alternatively, we can write a slightly more advanced constraint to maintain that each line segment is outside of the obstacle (rather than just the vertices). Here are some possible solutions to this optimization problem:

Fix meshcat line width.

The solution on the left is the (global) minimum for the problem; the solution on the right is clearly a local minima. Once a nonlinear solver is considering paths that go right around the obstacle, it is very unlikely to find a solution that goes left around the obstacle, because the solution would have to get worse (violate the collision constraint) before it gets better.

Make a version of this with B-splines

To deal with this limitation, the field of collision-free motion planning has trended heavily towards sampling-based methods.

There are many optimizations and heuristics which can dramatically improve the effectiveness of these methods. (optimized collision checking, weighted Euclidean distances, . )

Trajectory optimization techniques allow for rich specifications of costs and constraints, including derivative constraints on continuous curves, and can scale to high-dimensional configuration spaces, but they are fundamentally based on local (gradient-based) optimization and suffer from local mimima. Sampling-based planners reason more globally and provide a notion of (probabilistic) completeness, but struggle to accommodate derivative constraints on continuous curves and struggle in high dimensions. Is there any way to get the best of both worlds?

My students and I have been working on new approach to motion planning that attempts to bridge this gap. It builds on a general optimization framework for mixing continuous optimization and combinatorial optimization (e.g. on a graph), which we call "Graphs of Convex Sets" (GCS).

Add a PRM => GCS plot here.

A relatively small change to the PRM workflow is this: every time we pick a sample, rather than just adding the configuration-space point to the graph, let's expand that point into a (convex) region in configuration space. We'll happily pay some offline computation in order to find nice big regions, because the payoff for the online query phase is significant. First, we can make much sparser graphs -- a small number of regions can cover a big portion of configuration space -- which also allows us to scale to higher dimensions. But perhaps more importantly, now we have the flexibility to optimize online over continuous curves, so long as they stay inside the convex regions. This requires a generalization of the graph-search algorithm which can jointly optimize the discrete path through the graph along with the parameters of the continuous curves; this is exactly the generalization that GCS provides.

The GCS framework was originally introduced in Marcucci21 , and we have a mature implementation in Drake. It is a general optimization framework for combining combinatorial optimizations, like graph search, with continuous optimizations, like the kinematic trajectory optimization we studied above. GCS can provide a continuous extension to any "network flow" optimization (c.f. Ahuja93 ), but for motion planning, the first problem we are interested in is shortest paths on a graph.

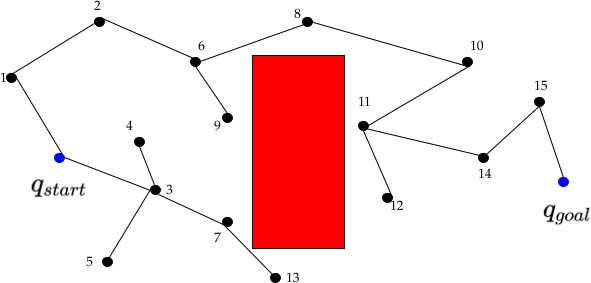

Consider the very classical problem of finding the (weighted) shortest path from a source, $s$, to a target, $t,$ on a graph, pictured on the left. The problem is described by a set of vertices, a set of (potentially directed) edges, and the cost of traversing each edge. GCS provides a simple, but powerful generalization to this problem: whenever we visit a vertex in the graph, we are also allowed to pick one element out of a convex set associated with that vertex. Edge lengths are allowed to be convex functions of the continuous variables in the corresponding sets, and we can also write convex constraints on the edges (which must be satisfied by any solution path).

The shortest path problem on a graph of convex sets can encode problems that are NP-Hard, but these can be formulated as a mixed-integer convex optimization (MICP). What makes the framework so powerful is that this MICP has a very strong and efficient convex relaxation; meaning that if you relax the binary variables to continuous variables you get (nearly) the solution to the original MICP. This means that you can solve GCS problems to global optimality orders of magnitude faster than previous transcriptions. But in practice, we find that solving only the convex relaxation (plus a little rounding) is almost always sufficient to recover the optimal solution. Nowadays, we almost never solve the full MICP in our robotics applications of GCS.

You can find more details and examples about GCS in my underactuated notes, and a thorough and very readable treatment of GCS as a general tool for mixed-discrete and continous optimization in Marcucci24a .

GCS provides a very general machinery for optimizing over the mixed discrete/continuous optimization problems that are naturally represented on a graph. But how do we transcribe the motion planning problem into a GCS? We first presented the transcription I'll describe here in the paper titled "Motion planning around obstacles with convex optimization" Marcucci23a . Since we've already spent time in this chapter talking about the inherent nonconvexity of the motion planning problem, you can see why we like this title! It should be slightly surprising that we can use convex optimization to effectively solve these problems to global optimality.

Let us assume for a moment that we are given a convex decomposition of the collision-free space. This is a strong assumption, which we will justify below.

Observe that if we have two points in the same convex collision-free region, then we know that the straight line connecting them is also guaranteed to be collision free. When points lie in the intersection of two C-space regions, then they can connect continuous paths through multiple regions. This is the essence of our transcription. What this implies is that for each visit to a vertex in the GCS, then we want to pick two points in a C-space region, so that the path lies in the region. So the convex sets in the GCS are not the C-space regions themselves, they are the set with $2n$ variables for an $n$-dimensional configuration space where the first $n$ and the last $n$ are both in the C-space region. In set notation, we'd say it's the Cartesian product of the set with itself. We form undirected edges between these sets iff the C-space regions intersect, and we put a constraint on each edge saying that the second point in the first set must be equal to the first point in the second set.

Let's run GCS trajectory optimization on the simple configuration space depicted above. I've put the start in the bottom left corner and the goal in the top right. I'll run the algorithm twice, once with a minimum-distance objective, and once with a minimum-time objective (and velocity constraints):

We can generalize this significantly. Rather than just putting a line segment in each set, we can use the convex hull property of Bezier curves to guarantee that an entire continuous path of fixed degree lies inside the set. Using the derivative properties of the Bezier curves, we can add convex constraints on the velocities (e.g. velocity limits), and guarantee that the curves are smooth even at the intersections between regions. To define these velocities, though, we need to know something about the rate at which we are traversing the path, so we also introduce the time-scaling parameterization, much like we did in the kinematic trajectory optimization above, with time scaling parameters in each convex set.

This is the result of running nearly the same minimum-time optimization as in the example above, but this time we parameterize each segment with a fifth order Bezier curve and a time scaling, and ask for continuity up to the fourth derivative ("snap").

Inspection of the trajectory derivatives reveals that the velocities are smooth and that they stay within the velocity bounds $[-1, 1]$ for the entire trajectory; not just at sample points.

Nonconvex trajectory optimization can, of course, consume richer costs and constraints, but does not have the global optimization and completeness elements that GCS can provide. We are constantly adding more costs and constraints into the GCS Trajectory Optimization framework -- some seemingly nonconvex constraints actually have an exact convex reformulation, others have nice convex approximations. This leads to a natural merger of the two techniques: we can use convex approximations of more complex costs and constraints in the GCS convex relaxation, but then use nonlinear optimization during in the rounding step to handle the nonconvexity exactly once GCS selects the discrete path through the graph to give a strong initial guess Wrangel24 . This can be accomplished in Drake via the use_in_transcription option of the AddCost and AddConstraint methods of GCS, and adding nonconvex constraints only to the Transcription::kRestriction .

Lots more examples

Ok, so how do we obtain a convex decomposition of collision-free configuration space? Let's start by taking the analogy with the PRM seriously. If we sample a collision-free point in the configuration space, then what is the right way to inflate that point into a convex region?

The answer is simpler when we are dealing with convex geometries. In my view, it is reasonable to approximate the geometry of a robot with convex shapes in the Euclidean space, $\Re^3.$ Even though many robots (like the iiwa) have nonconvex mesh geometries, we can often approximate them nicely as the union of simpler convex geometries; sometimes these are primitives like cylinders and spheres, alternatively we can perform a convex decomposition directly on the mesh. But even when the geometries are convex in the Euclidean space, it is generally unreasonable to treat them as convex in the configuration space.

Let's run the IrisInConfigurationSpace (aka IRIS-NP) algorithm on the example of iiwa reaching into a shelf that we played with before. We will use the full 7 degrees of freedom of the arm, and the original collision geometry. I'd like for this example to demonstrate two things: (1) the mechanics of using IrisInConfigurationSpace and (2) the fact that these IRIS regions can cover a lot of volume, even when they are up against complicated configuration-space geometries.

IrisInConfigurationSpace can be configured using a single seed point; iterations of the algorithm will start with a small region around that point and "inflate" it to maximize a local approximation of configuration space volume. We can find a seed configuration, $q,$ that is reaching inside the top shelf using InverseKinematics . But if we only run IRIS from there, then the region it will find will not fill space inside the shelf, it will instead creep out of the shelf in order to cover the much larger configuration space outside of the shelf. To avoid this, we'll run IRIS twice; once with a seed that is the "home configuration" of the robot, which will grow a region outside the shelf. Then we'll run IRIS again with the IK seed inside the shelf, but with an additional constraint using the first region as an obstacle (asking that they do not overlap). This will force the second region to use the volume inside the shelf to maximize volume.

A different approach for obtaining this volume, which I now favor, is to collect many configuration space samples in the region of interest using inverse kinematics, then compute the MinimumVolumeCircumscribedEllipsoid to be used as the starting_ellipse for IRIS. Then we run only one iteration of IRIS to get a nice region with a notion of volume corresponding to covering this area of configuration space. This approach avoids the false constraint of using previous regions as obstacles (so leads to bigger regions), runs faster (due to using only one iteration of IRIS), and is more parallelizable.

If we were to continue to follow the analogy with the PRM, then we could imagine performing a convex decomposition of the space by sampling at random, rejecting samples that are either in collision or in an existing IRIS region, and then inflating any remaining samples. But in fact, we can do much better than this -- we can cover a larger percentage of the configuration free space with the same number of regions, or alternatively cover a similar volume with less regions. Our current best algorithm for performing this convex decomposition proceeds by computing a "minimum clique cover" of the "visibility graph" Werner23 (implemented in Drake).

If we run the "minimum clique cover" algorithm with a coverage threshold of 96% of the configuration space on the simple 2D example, we obtain automatic decompositions like this:

In practice, it seems reasonably tractable to try to compute a quite dense covering of the configuration space for 7-DOF manipulators, and perhaps even up to say 10 degrees of freedom. But for higher dimensions, I think that trying to cover every nook and cranny of the configuration space is a false goal. I strongly prefer the idea of intializing the clique-cover algorithm with sample points that represent the "important" regions of your configuration space. Sometimes we do this by manual seeding (via calls to inverse kinematics), or alternatively, we can do it by examining trajectories that come from teleop demonstrations, from another planner (that can generate plans, but without the same guarantees), or even from a reinforcement learning policy.

Here is a notebook that contains a handful of different tools for steering the creation of your IRIS regions.

Fast path planning (FPP) Marcucci23 . Many motion planning instances don't need the full power of GCS; heuristic approximations can often find very good solutions. Rather than solving the full GCS problem, considering the discrete and continuous variables simultaneously, FPP alternates between solving the discrete and continuous problems to find a local minima. FPP is (typically) faster than GcsTrajectoryOptimization , and by virtue of using alternations, it can handle constraints on the derivatives (e.g. acceleration constraints) which GcsTrajectoryOptimization cannot handle. However, FPP is more limited on the class of convex sets/constraints that it can support. (Drake implementation coming soon!)

GCS on a manifold including for mobile manipulation Cohn23 , and for bimanual manipulation Cohn23a .

GCS with dynamic constraints. For discrete-time systems described by piecewise-affine dynamics and convex objectives and constraints, GCS provides the strongest formulations to date Marcucci21 . It is also possible to reason more directly about continuous dynamic constraints over continuous curves (more coming soon!).

Planning through contact. Amazingly, we now have tight convex relaxations for quasi-static manipulation with contact Graesdal24 . The initial examples are quite simple (so that we could study the problem carefully, and defer the details of scaling the solver), but you can expect much more from us in this direction very soon.

Planning under uncertainty (coming soon!).

Task and motion planning (coming soon!). Kurtz23 showed a natural transcription from temporal logic to GCS.

GCS as a (feedback) policy (coming soon!).

Finish these! Boyd's paper a bit more clear about the writing of the convex objective. Tobia said he also has some notes on the higher derivatives.

Once we have a motion plan, a natural question is: what is the fastest that we can execute that plan, subject to velocity, acceleration, and torque-limit constraints?

0,$ where $h'(s) = \pd.$ We'll also use the shorthand $h''(s) = \pd,$ etc. The advantage of this parameterization is that when $(s)$ is fixed, many of the objectives and constraints that we might want to put on $\bq(t)$ are actually convex constraints on $h(s).$ To see this, write \begin '(s) &= \dot\bq(t) h'(s),\\ ''(s) &= \ddot(t)h'(s)^2 + \dot(t)h''(s).\end

One common question is whether TOPP can be used to write convex constraints on the jerk (or higher derivatives) of the trajectory, $$\dddot\bq(t) = '''(s) \dot^3(t) + 3''(s) \dot(t) \ddot(t) + '(s)\dddot(t).$$ This comes up because many industrial robot manipulators have jerk limits that must be respected. Debrouwere13 addressed a version of this question. (More soon. )

For this exercise, you will implement a optimization-based inverse kinematics solver to open a cupboard door. You will work exclusively in . You will be asked to complete the following steps:

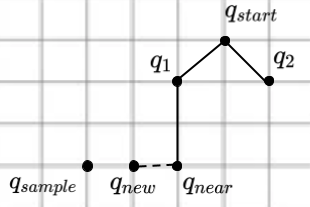

For this exercise, you will implement and analyze the RRT algorithm introduced in class. You will work exclusively in . You will be asked to complete the following steps:

Due to the random process by which nodes are generated, the paths output by RRT can often look somewhat jerky (the "RRT dance" is the favorite dance move of many roboticists). There are many strategies to improve the quality of the paths and in this question we'll explore two. For the sake of this problem, we'll assume path quality refers to path length, i.e. that the goal is to find the shortest possible path, where distance is measured as Euclidean distance in joint space.

Name one pair of nodes for which the shortcutting algorithm would result in a shorter path (i.e. two nodes along our current solution path for which we could produce a shorter path if we were to directly connect them). You should assume the distance metric is the 2D Euclidean distance.

Name one pair of nodes for which the shortcutting algorithm would result in a shorter path (i.e. two nodes along our current solution path for which we could produce a shorter path if we were to directly connect them). You should assume the distance metric is the 2D Euclidean distance.

For this exercise, you will implement part of the IRIS algorithm Deits14 , which is used to compute large regions of obstacle-free space through a series of convex optimizations. These regions can be used by various planning methods that search for trajectories from start to goal while remaining collision-free. You will work exclusively in . You will be asked to complete the following steps:

| Accessibility | © Russ Tedrake, 2024 |